Improving the human-robot relationship, one successful conversation at a time

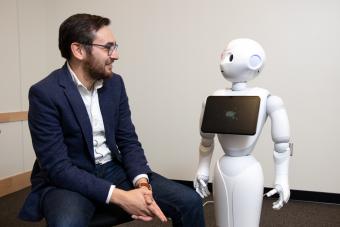

Should an autonomous robot ever ask for advice? Computer Science Assistant Professor Tom Williams is tackling that question and more in the MIRROR Lab at Mines.

A hospital robot annoys its human coworkers so much that they lock it inside a storage closet. Mall robots get overwhelmed by groups of abusive elementary school students.

Tom Williams has heard the stories.

For the assistant professor of computer science, though, the stories are more than just funny internet memes waiting to happen – they are examples of a failure in human-robot communication.

“Natural language is a great communication paradigm because it’s so flexible. It’s not a traditional interface where you have to physically interact with something — it doesn't require any specific hardware or any specific training,” Williams said. “But it also brings a lot of baggage with it. If a robotic system has natural language competence, people are going to expect that it is going to interact with them in a way that is humanlike. And that’s not always the case right now.”

In the MIRROR Lab, Williams and his team are focused on improving these human-robot interactions, combining perspectives and techniques from a wide variety of fields, including computer science, cognitive and social psychology, moral philosophy and philosophy of mind. The goal is to find ways to build trust and rapport between intelligent robots and their human colleagues.

“Addressing the challenges that accompany natural language is especially critical in the domains that our group is working in, like astronaut-robot interaction. We’re not just deploying robots to give people social media notifications — we’re deploying robots to help people in space,” Williams said. “If these robots are acting in a way that’s inappropriate or that annoys people, then the people are just not going to use the technology and thus it’s not going to have the benefit that it was intended to have.”

Here are three questions that Williams’ group is tackling right now in hopes of making human-robot interactions as effective as possible.

1. Should an autonomous robot ever ask for advice?

On crewed missions to the Moon, astronauts may very well find themselves working alongside robots and artificial intelligence systems.

In all likelihood, those robots will be designed to operate autonomously, able to make decisions and take action on their own. Williams recently received a NASA Early Career Faculty Award to explore whether there might be situations where the robots should be less independent than they can be, in the name of building a stronger relationship with their astronauts.

“You can imagine this as a person — if you're making some decision, you have a bunch of different options available to you. You can just go ahead and make a decision and take that action. You can make a decision and let your supervisor know what you are doing. You can make a decision and ask for permission to do something. Or you can maybe ask for advice as to which of a couple of different options you have,” Williams said. “This project is looking at how robots can make this decision — going ahead and taking an action, which is going to be effective and not place additional mental workload burdens on their supervisors, versus asking for clarification or permission when they don’t really need to, in order to build trust and rapport.”

That performance of autonomy could vary based on the situation, location and to whom the robot is talking, he said. At the same time, researchers will also look at the performance of identity, or the “personalities” that a team of robots could take on for human benefit up in space.

“Each of these robots doesn’t really need to have a unique identity — they’re all part of the same network system,” Williams said. “We’re interested in, depending on different identity performance choices, how this affects people’s trust in the individual components of that system versus trust in the system as a whole.”
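To make the four options Williams lists more concrete (act, act and inform, ask permission, ask advice), here is a minimal Python sketch. The names `AutonomyMode` and `choose_mode`, the three boolean inputs and the rule itself are illustrative assumptions, not the lab's method; the project described above is studying how such a choice should actually be made.

```python
from enum import Enum


class AutonomyMode(Enum):
    """The four options from the quote above (names are hypothetical)."""
    ACT = "act without reporting"
    ACT_AND_INFORM = "act and tell the supervisor"
    ASK_PERMISSION = "ask permission before acting"
    ASK_ADVICE = "ask which option to take"


def choose_mode(supervisor_busy: bool, rapport_needed: bool,
                options_tied: bool) -> AutonomyMode:
    """Toy rule of thumb (an assumption, not a published algorithm)."""
    if supervisor_busy:
        # Keep the supervisor's workload low: never ask a question,
        # and report only if rapport matters in this situation.
        if rapport_needed:
            return AutonomyMode.ACT_AND_INFORM
        return AutonomyMode.ACT
    if options_tied:
        # No clear best option and the supervisor has time: ask for advice.
        return AutonomyMode.ASK_ADVICE
    if rapport_needed:
        # The robot could act alone, but asking may build trust.
        return AutonomyMode.ASK_PERMISSION
    return AutonomyMode.ACT


print(choose_mode(supervisor_busy=False, rapport_needed=True, options_tied=False))
```

The sketch only shows the shape of the trade-off: asking costs the supervisor attention, while acting alone gives up a chance to build rapport.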

2. Could robots benefit from a lesson from Confucius?

So far, most of the work in robot ethics has been grounded in a single moral paradigm, deontological ethics, which focuses on the inherent rightness or wrongness of actions themselves.

It makes sense from a computational perspective, Williams said — computer scientists can easily program a list of norms a robot should never violate. But what if other moral paradigms could offer additional benefits for trust and rapport?

That’s the question that Williams, Mines Assistant Professor Qin Zhu and collaborators at the U.S. Air Force Academy hope to answer by introducing role-based ethics into the robot equation.

“We’re interested specifically in how robots should be rejecting commands that they get when those commands are inappropriate,” Williams said. “The default way to do that would be you have some norm-based reasoning system where you have a set of moral norms. You’d say, ‘Oh, I can’t do this because it’s wrong,’ or ‘I know I can’t do this because doing X is forbidden.’

“But what if that rejection is grounded in your work relationships? What if you say, ‘Oh, I wouldn’t be a good teammate if I did that?’ Maybe it’s going to force them to reflect a little bit deeply on exactly why this action is unacceptable.”

Specifically, researchers are focusing on Confucian ethics, a role-based paradigm where morality is determined by how actions comply with or are benevolent with respect to the roles that people play and their relationships with other people.

Say, for example, a robot is teaching some students how to play a game, Williams said. One of the students steps out of the room to take a phone call and the other students ask the robot to do something impermissible, like giving them a hint to the game or, worse, rifling through the wallet of the other student.

“In both of those cases, you can reject the request in terms of it just being wrong or by appealing to relationships — you could say, ‘I wouldn’t be a good instructor if I gave you a hint for this game that I’m trying to teach you’ or ‘You’re not being a good student for asking for this hint while the other student is out of the room,’” Williams said. “Similarly, for the more severe violation, you can say, ‘Well, I wouldn’t be a good friend or you are not being good friends for answering this request or for making this request.’ That requires the robot to know what types of behaviors are viewed as benevolent when performing different types of roles.”

The team plans to develop algorithms that will allow robots to perform this type of role-based moral reasoning, as well as conduct psychological experiments to see just how effective the different command rejections are in various scenarios.
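The contrast between a norm-based and a role-based rejection can be illustrated with a short Python sketch. Everything here is hypothetical: the `FORBIDDEN` set, the `respond` function and the exact wording are stand-ins built from the examples in the quotes above, and real role-based reasoning would need a model of which behaviors count as benevolent for each role.

```python
# Hypothetical list of requests the robot should refuse (an assumption).
FORBIDDEN = {"give a hint", "search a wallet"}


def respond(request: str, style: str, robot_role: str = "instructor") -> str:
    """Return a reply to a request, phrased in the chosen rejection style."""
    if request not in FORBIDDEN:
        return "OK."
    if style == "norm":
        # Norm-based: appeal to a rule that forbids the action.
        return f"I can't {request} because doing that is forbidden."
    if style == "role":
        # Role-based: appeal to the robot's role and relationships.
        return f"I wouldn't be a good {robot_role} if I agreed to {request}."
    raise ValueError(f"unknown rejection style: {style!r}")


print(respond("give a hint", "norm"))
print(respond("give a hint", "role"))
print(respond("search a wallet", "role", robot_role="friend"))
```

Both styles refuse the same requests; only the justification changes, which is the variable the planned psychological experiments would compare.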

3. How should robots communicate with a busy co-worker?

Imagine that you’re working in an office and need to share some information with a co-worker who’s busy on another project. Do you knock on their door for a quick chat or do you send an email instead?

Williams and Leanne Hirshfield of the University of Colorado Boulder are collaborating to develop a tool that could allow robots to make similar communication decisions based on their human co-workers’ mental workload. That tool would connect augmented reality with functional near-infrared spectroscopy, a noninvasive brain measurement technology in which sensors on the subject’s head can detect which parts of the brain are most active based on the oxygenation of the blood.

“Dr. Hirshfield has done a lot of work in the past showing how you can measure general mental workload, how much mental work is going on, especially in the prefrontal cortex,” Williams said. “What we’re trying to do now is to see if we can specifically discriminate between different types of mental workload. Is this person under high working memory load, where they’re trying to remember a lot of things? Are they under high visual load, where their visual system is overloaded because they’re looking at a scene that has lots of distracting objects? Or are they very auditorily overloaded, because there’s a lot of distracting sounds?”

From that work, Williams hopes to build machine learning models that can automatically predict which communication method a robot should use, based on its human teammate’s workload type, to maximize performance and rapport.

In other words, should the robot draw a circle in the augmented reality headset around the item that it wants the human to see, describe the item using natural language or use a combination of both?

“There’s actually some interesting trade-offs between using language and using AR,” Williams said. “Where when you're using AR to pick things out, it’s very effective obviously because you immediately know exactly what I’m talking about. But people don’t really like when robots communicate just using AR alone. They like it when it’s paired with natural language. But there could be different contexts in which you still might want to just use AR or just use language — for example, if you are very auditorily overloaded, if you’re already trying to listen for something and there’s a lot of distracting things, then it might not be great for the robot to use this long natural language expression because that’s just going to add to your overload, and you could miss whatever you’re trying to listen for.”
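The trade-offs in that last quote can be written as a small lookup, sketched below in Python. This is a hand-written rule table standing in for the machine learning models the team hopes to build, not a version of them. The names `Load`, `Modality` and `choose_modality` are hypothetical, and only the auditory case and the general preference for pairing AR with language come from the article; the other rows are assumptions.

```python
from enum import Enum


class Load(Enum):
    """Workload types named in the article, plus a low-load case (assumed)."""
    WORKING_MEMORY = "working memory"
    VISUAL = "visual"
    AUDITORY = "auditory"
    LOW = "low"


class Modality(Enum):
    AR = "AR annotation only"
    LANGUAGE = "natural language only"
    BOTH = "AR paired with natural language"


def choose_modality(load: Load) -> Modality:
    """Pick how the robot should refer to an object (toy rules)."""
    if load is Load.AUDITORY:
        # From the article: a long spoken description adds to auditory overload.
        return Modality.AR
    if load is Load.VISUAL:
        # Assumption by symmetry: avoid adding more to a cluttered scene.
        return Modality.LANGUAGE
    # From the article: people prefer AR when it is paired with language.
    # Using this default for working-memory load is an assumption.
    return Modality.BOTH


for load in Load:
    print(f"{load.value}: {choose_modality(load).value}")
```

In the approach described above, the workload type would be estimated from brain measurements and the mapping would be learned from data rather than fixed by hand.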